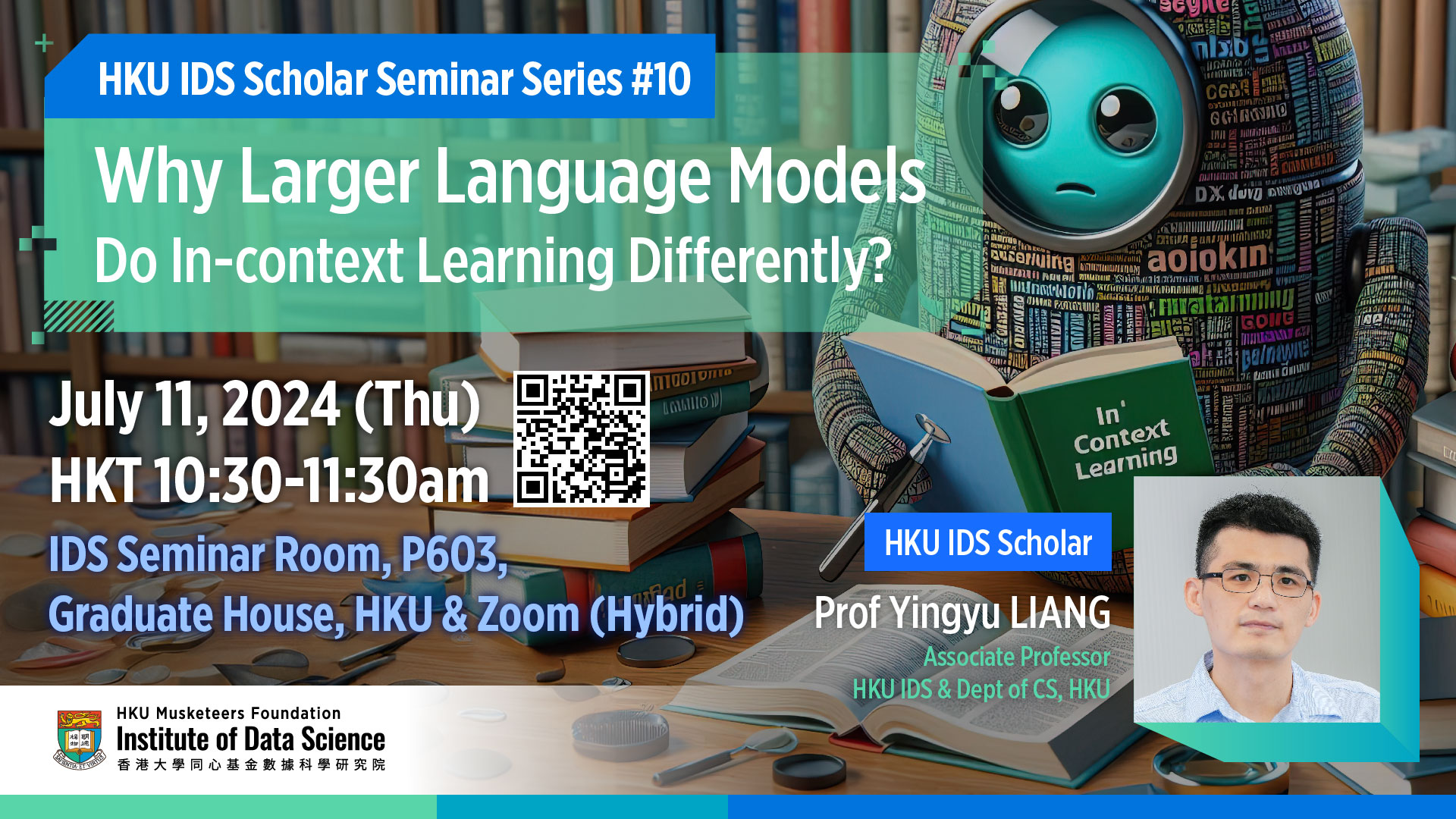

HKU IDS Scholar Seminar Series #10: Why Larger Language Models Do In-context Learning Differently?

Venue: IDS Seminar Room, P603, Graduate House / Zoom

Mode: Hybrid. Seats for on-site participants are limited. A confirmation email will be sent to participants who have successfully registered.

Abstract

Speaker

Moderator

Professor Yi Ma is a Chair Professor in the Musketeers Foundation Institute of Data Science (HKU IDS) and Department of Computer Science at the University of Hong Kong. He took up the Directorship of HKU IDS on January 12, 2023. He is also a Professor at the Department of Electrical Engineering and Computer Sciences at the University of California, Berkeley. He has published about 60 journal papers, 120 conference papers, and three textbooks in computer vision, generalized principal component analysis, and high-dimensional data analysis.

Professor Ma’s research interests cover computer vision, high-dimensional data analysis, and intelligent systems. For the full biography of Professor Ma, please refer to: https://datascience.hku.hk/people/yi-ma/

For more information, please contact:

Email: datascience@hku.hk

- June 27, 2024
