HKU-IDS Scholar

Department of Mathematics, HKU

Mathematical Optimization, Machine Learning

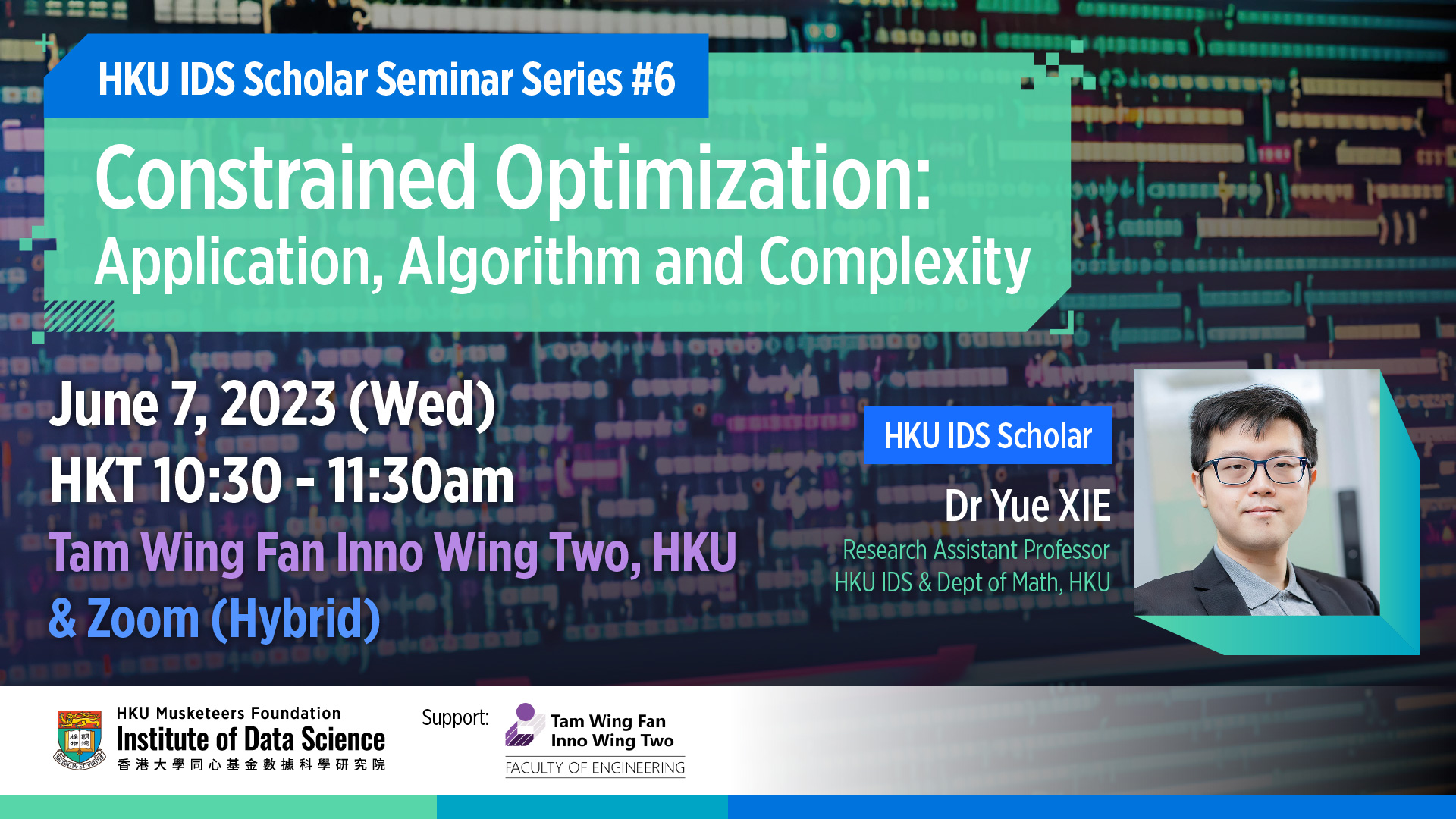

Dr. Yue Xie is a Research Assistant Professor in Musketeers Foundation Institute of Data Science (HKU-IDS) and Department of Mathematics at the University of Hong Kong. He was a postdoc at UW Madison working in the nonconvex optimization group led by Professor Stephen J. Wright. He received his PhD degree in Pennsylvania State University and Bachelor degree from Tsinghua University. Dr. Yue Xie has been focusing on algorithm design and analysis to address nonconvex and stochastic optimization problems with all types of applications including machine learning and data science. He has published/served as the referee of top-tier journals including Mathematical Programming, SIAM Journal on Optimization, and IEEE Transactions on Automatic Control, etc. He has delivered numerous presentations at major international conferences such as International Conference on Continuous Optimization (ICCOPT), International Symposium on Mathematical Programming (ISMP), SIAM Conference on Optimization and International Conference on Machine Learning (ICML). More details about him can be found at: https://yue-xie.github.io.

Computational optimal transport

Optimal transport (OT) problem is a mathematical problem of more than 200 years’ history. It is closely related to the basic concept – Wasserstein distance that compares probability distributions. This concept is widely used in image processing, decision science, and machine learning. However, how to efficiently solve the optimal transport problem is still an open question. To approximate the solution, people often formulate and solve a large-scale linear programming (LP) problem with special structure. We aim to design reliable and fast algorithms to solve this LP. These algorithms are expected to have competitive practical performance and/or theoretical guarantees compared to existing state-of-art algorithms for optimal transport, most of which are Sinkhorn based.

In our recent work, we consider random block coordinate descent (RBCD) methods to directly solve the related LP. In each iteration, we restrict the potential large-scale optimization problem to small LP subproblems constructed via randomly chosen working sets. Using an expected Gauss-Southwell-q rule to select these working sets, we equip a vanilla version with almost sure convergence and linear convergence rate in expectation to solve a general LP problem. By further exploring the special structure of constraints in the OT problems and leveraging the theory of linear systems, we proposed several approaches to refine the random working set selection and accelerate the vanilla method. Preliminary numerical experiments verify the acceleration effects, solution sparsity and demonstrate several merits of an accelerated random block coordinate descent over the Sinkhorn’s algorithm when seeking highly accurate solutions. (https://arxiv.org/abs/2212.07046)

Algorithm study for constrained optimization

Constrained optimization becomes increasingly useful in modelling applications in data science. For example, people add manifold constraints, nonnegative constraints or sparsity constraints for training tasks in machine learning and image processing; the minimax problem – math model of generative adversarial network (GAN), is also closely related to constrained optimization. We are interested in studying efficient algorithms to solve this problem class, including methods of multiplier, operator splitting methods, proximal point methods, primal dual methods and projection methods, etc. Due to the nonconvex nature of many modern optimization problems, first-order stationary solution is no longer satisfying, and we study how these classical methods can find solutions of higher-order stationarity efficiently and provide good complexity guarantees. In the context of big data, optimization problem is often complicated by expected-valued functions and finite sums, so stochastic methods assumes more relevance. Therefore, we are also engaged in investigating the convergence properties of these classical optimization methods in the stochastic setting.

- Yue Xie, Zhongjian Wang, and Zhiwen Zhang. “Random block coordinate descent methods for computing optimal transport and convergence analysis”. arXiv preprint, arXiv:2212.07046, https://arxiv.org/abs/2212.07046, (2022).

- Yue Xie and Stephen J. Wright. “Complexity of a Projected Newton-CG Method for Optimization with Bounds”. arXiv preprint, arXiv: 2103.15989. https://arxiv.org/abs/2103.15989, (2022).

- Yue Xie and Stephen J. Wright. “Complexity of proximal augmented Lagrangian for nonconvex optimization with nonlinear equality constraints.” Journal of Scientific Computing. https://link.springer.com/article/10.1007/s10915-021-01409-y, (2021).

- Yue Xie and Uday V. Shanbhag. “Tractable ADMM schemes for computing KKT points and local minimizers for l0-minimization problems.” Computational Optimization and Application. http://link.springer.com/article/10.1007/s10589-020-00227-6, (2020).

Nonconvex/stochastic optimization, Sparse learning, Dictionary learning, Robust optimization, Variational inequality

- Guangdong Province Fundamental and Applied Fundamental Research Regional Joint Fund, Project #2022B1515130009. “Optimization modelling methods and large-scale application demonstration of automatic analysis and auxiliary diagnosis of medical endoscope images (醫學內窺鏡圖像自動分析與輔助診斷的優化建模方法及大規模應用示範)”, Commence in Jan 2023, Co-Investigator (in collaboration with Sun Yat-Sen University).